Oceanographer John Orcutt Elected to National Academy of Engineering

San Diego, Feb. 22, 2011 -- Longtime Calit2 participant John Orcutt, a Distinguished Professor of Geophysics at UC San Diego’s Scripps Institution of Oceanography, has been elected to the National Academy of Engineering. The academy recognized Orcutt "for international leadership in development of new ocean-observing infrastructure and environmental and geophysics research."

Orcutt has been chief scientist on more than 20 oceanographic expeditions. His research areas are marine seismology applied to both crustal and mantle structure, particularly seismic tomography; theoretical seismology; and the use of information technology to collect and process real-time environmental data.

He received his Bachelor’s degree in math and physics from the U.S. Naval Academy at Annapolis, then went to England on a Fulbright fellowship and earned a Master’s degree in physical chemistry at the University of Liverpool. He earned his Ph.D. in earth sciences from Scripps in 1976 and has been a professor there since 1982. In this Q&A, he looks back at his 40 years at UCSD and at a project that will keep him busy for years to come:

A. I have had a lot of experience through the years with engineering. I finished my career in the Navy as the chief engineer on a submarine, the Kamehameha. Then at Scripps I began to operate seismographs in an ocean environment. In oceanography we’ve had to build our own instruments for making measurements in the oceans, and much of what we’ve learned about the oceans has come from engineering solutions we built to explore them. And the Ocean Observatories Initiative Cyberinfrastructure project I am now leading is another big engineering undertaking. So sometimes it’s hard to discern what is engineering and what is science.

Q. You grew up on a ranch in Colorado. What accounts for your career in science and engineering?

A. I grew up in the Sputnik era, in the 1950s, and that had a huge impact on me and on what I decided to do with my life and career, as it did for many people. That was a moment in history that made a difference to lots of people who went into science and engineering, and people allude to it today – that we need a new approach to make engineering, science and education more interesting to young people. That’s basically how I wound up doing what I did through life.

A. There was a very close tie between my research in seismology and the Advanced Research Projects Agency, or ARPA, particularly in the application of seismology to monitoring test-ban treaties. Seismology is an awfully good way to detect large explosions on Earth and therefore to monitor treaties (‘trust but verify,’ if you will). Around that time ARPA also funded a number of groups to build a network that would link investigators it funded. It became known as ARPAnet, and email was invented as a way to communicate from one university to the next. This all happened in the 1970s, and being able to send emails and small files back and forth was really quite a revolution. You’d sit down and turn on a big cathode ray tube, then send data and information back and forth. It was great fun to live through that and see how it all began, and now to be able to use the Internet today. I was definitely an early adopter, and a number of my colleagues at Scripps adopted the Net without hesitation.

Q. You are also a scientist in the San Diego Supercomputer Center. How have high-performance computing and advanced networking changed your research?

A. When I first started doing theoretical seismology, we pretty much dealt with the wave equation, and we found asymptotic solutions to problems that we could compute, essentially, with calculators and early computers, and write the solutions in closed form on a pile of paper. That’s almost disappeared now. We’ve developed a lot of physical understanding about the way waves propagate on Earth, but today the ability to solve the wave equation completely with discrete numerical computations means that we’ve changed the science completely. We’re able to simulate models of Earth that in many ways fully replicate the data we’re collecting on the face of the Earth. That gives us much higher resolution and a better understanding of earthquakes, nuclear sources and tsunamis, all of which are much more predictable than they were in the ’70s and earlier, when all the work had to be done with approximations using pencil and paper – lots and lots of paper. So it changed everything. The engineering, the Internet, computers, the increasing density of transistors on a silicon chip, all contributed to this ability to think about how we actually compute and how we predict the future. It’s become a big deal in climate, for example. You can now take an entire planet, discretize it and run calculations with global circulation models. You can model the impacts of increasing temperatures and gases on the atmosphere at better and better resolution, and faster and faster as time goes on. We can see a time in the next one or two decades when these very, very complex models can be fully applied to the climate problem.
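
To make the contrast with pencil-and-paper asymptotics concrete, here is a minimal sketch of what solving the wave equation "with discrete computations" can look like: an explicit finite-difference (leapfrog) scheme for the one-dimensional wave equation u_tt = c² u_xx on a string with fixed ends. This is a textbook illustration, not the method used in Orcutt’s research; the function name `simulate_wave`, the grid size, wave speed, Gaussian starting pulse and Courant number are all illustrative assumptions.

```python
import math
from typing import List, Tuple


def simulate_wave(nx: int = 101, c: float = 1.0, length: float = 1.0,
                  steps: int = 200, courant: float = 0.5) -> Tuple[List[float], List[float]]:
    """Leapfrog finite-difference solution of u_tt = c^2 u_xx on [0, length].

    Ends are fixed (u = 0); the string starts at rest with a Gaussian pulse
    in the middle. Returns the grid x and the displacement u after `steps` steps.
    """
    dx = length / (nx - 1)
    dt = courant * dx / c            # stability (CFL) requires c*dt/dx <= 1
    r2 = (c * dt / dx) ** 2          # squared Courant number

    x = [i * dx for i in range(nx)]
    u_prev = [math.exp(-((xi - 0.5 * length) / (0.05 * length)) ** 2) for xi in x]
    u_prev[0] = u_prev[-1] = 0.0

    # First step from zero initial velocity: u1 = u0 + (r2 / 2) * second difference of u0
    u = u_prev[:]
    for i in range(1, nx - 1):
        u[i] = u_prev[i] + 0.5 * r2 * (u_prev[i + 1] - 2 * u_prev[i] + u_prev[i - 1])

    # Remaining steps: u_next = 2u - u_prev + r2 * second difference of u
    for _ in range(steps - 1):
        u_next = [0.0] * nx          # endpoints stay fixed at zero
        for i in range(1, nx - 1):
            u_next[i] = 2 * u[i] - u_prev[i] + r2 * (u[i + 1] - 2 * u[i] + u[i - 1])
        u_prev, u = u, u_next

    return x, u


x, u = simulate_wave()
print(f"peak |u| after 200 steps: {max(abs(v) for v in u):.3f}")
```

The starting pulse splits into two waves that travel outward and reflect off the fixed ends. Keeping the Courant number c·Δt/Δx at or below 1 is what keeps this explicit scheme stable. Research codes work in three dimensions with heterogeneous Earth models and far more careful numerics, but they rest on the same idea of replacing derivatives with differences on a grid.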

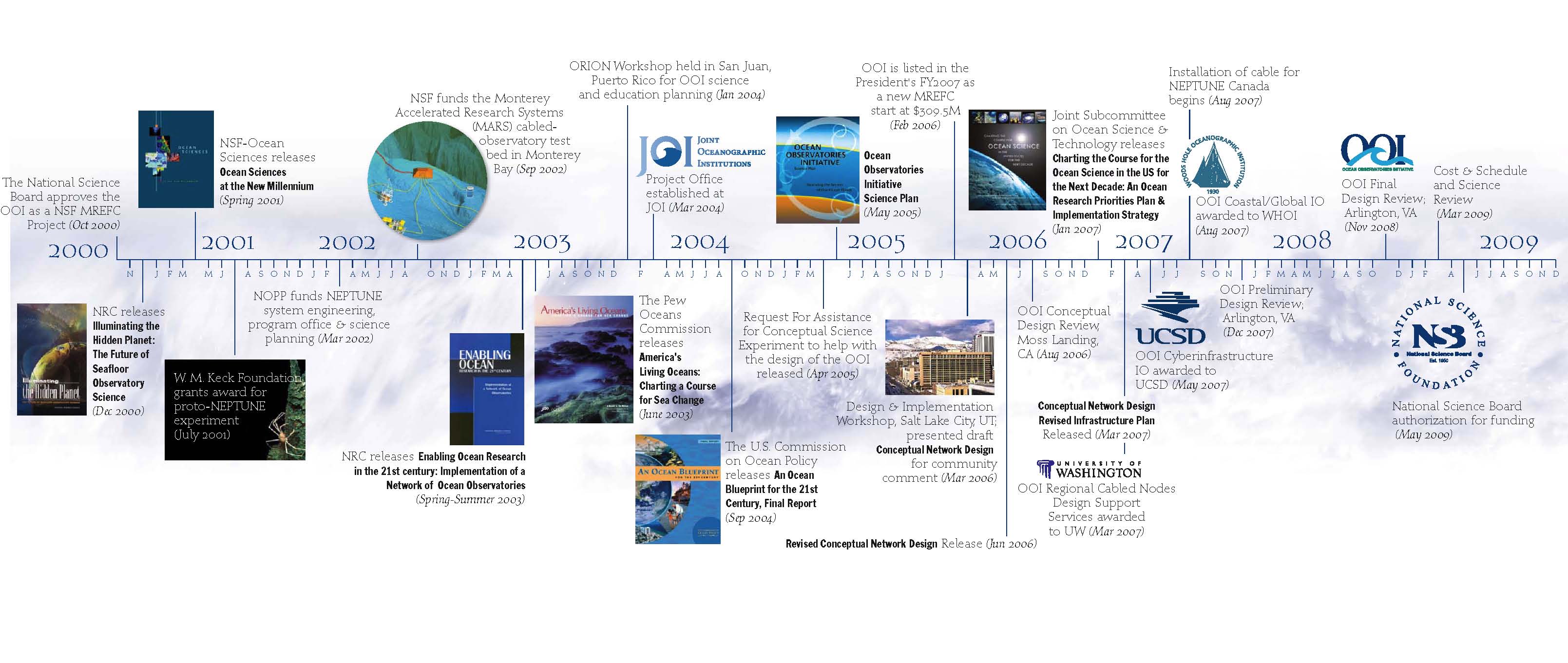

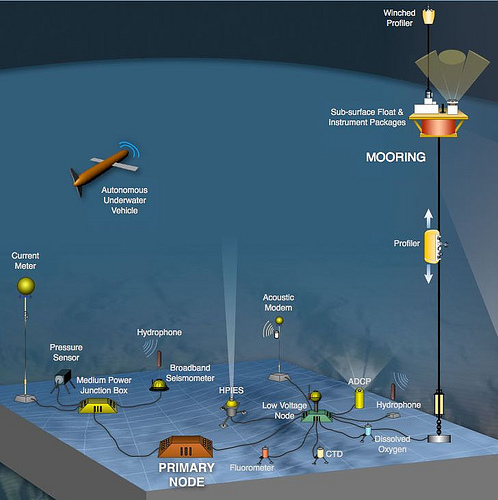

A. I can remember exactly when it started in many ways. A group of us organized a workshop in Woods Hole in 1987 and called for building a global network of observatories. That was nearly 25 years ago. It began really as a seismic program, to put a lot of seismometers around the planet, but over time many other scientific disciplines were integrated into the program. Now the OOI is a broad, multidisciplinary study of the Earth. The program was funded about a year and a half ago, but a lot of planning led up to that. We had a series of committees, and eventually the NSF decided to fund one of them, which over the course of six or seven years developed a plan and a number of studies for the National Academies on how you would make these kinds of observations. The National Science Board approved the new program at a level of about $400 million to construct, over a five-year period, a global observing system in the oceans. It’s not as large and comprehensive as we dreamed about in the ’80s, but it provides all the tools necessary to build, over the coming decades, a major capability to make the kinds of measurements we need. The NSF has agreed to fund this for 25 to 30 years to get it going: not just the five-year investment being made right now in construction, but the growth, operation and maintenance of the system. So the four initial global sites will grow to many more. There are also much more closely spaced observatories off the East Coast south of Massachusetts and off the West Coast of Oregon and Washington to make coastal environment measurements.

The real kingpin is a fiber-optic cable off the coast of Washington and Oregon that runs along the sea floor, transmitting at speeds of 10 gigabits per second to send data back from a bunch of sensors as well as to send commands to those sensors on the sea floor, while also supplying kilowatts of power to operate cameras, lights and sophisticated instruments that are being built.

A. We’re working on integrating all the various platforms and sensors in this global network. Our goal is to make users aware simply of the data coming from the instruments, rather than the details of the instruments and platforms themselves. The other OOI groups have to put things in the ocean that will support these measurements, but our reach with the cyberinfrastructure extends clear down to the sensor itself, so that every individual sensor and every individual platform can be addressed by the cyberinfrastructure. We call it the Integrated Ocean Network, and it represents the kind of network that extends out to the ends of the observatories at all different scales, so that when the data are returned – and the data are all returned in real time, with latencies of seconds in many cases – they are immediately available on an open basis to researchers. Scientists around the world can subscribe to the sensors of interest and build their own private observatories from the feeds of these various instruments. I guess the analogy might be subscribing to a podcast from the iTunes store. And the data will be available to scientists and educators around the world. In that sense, it is like the Large Hadron Collider project: a lot of the analysis of the data from the Collider is done in the U.S., even though the Collider is located below the Franco-Swiss border. We envision the same kind of approach. There will be no closed data in the system, with one exception: someone building a new instrument will be able to operate and test it for a year before its data are streamed live to everybody. For the five-year construction, we’re putting sensors in the ocean, and as soon as they’re turned on, everyone will have access. I hope it will provide the kind of information from the oceans that will foster a lot more education about the way the oceans work.
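
The subscription model described here is essentially publish/subscribe. The sketch below is a hypothetical, in-memory illustration of that pattern only; it is not the OOI cyberinfrastructure’s actual interface, and the class names `SensorBroker` and `PrivateObservatory`, as well as the sensor IDs, are invented for the example. Instruments publish samples under a sensor ID, and each researcher’s "private observatory" receives only the feeds it subscribed to.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

Sample = Dict[str, float]
Callback = Callable[[str, Sample], None]


class SensorBroker:
    """Hypothetical in-memory publish/subscribe broker.

    Instruments publish samples under a sensor ID; only callbacks that
    subscribed to that ID receive them.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, sensor_id: str, callback: Callback) -> None:
        self._subscribers[sensor_id].append(callback)

    def publish(self, sensor_id: str, sample: Sample) -> int:
        """Deliver a sample to every subscriber of sensor_id; return the delivery count."""
        callbacks = self._subscribers.get(sensor_id, [])  # .get avoids creating empty entries
        for callback in callbacks:
            callback(sensor_id, sample)
        return len(callbacks)


class PrivateObservatory:
    """A researcher's hypothetical 'private observatory': just the feeds they chose."""

    def __init__(self, broker: SensorBroker, sensor_ids: List[str]) -> None:
        self.received: List[Tuple[str, Sample]] = []
        for sensor_id in sensor_ids:
            broker.subscribe(sensor_id, self._on_sample)

    def _on_sample(self, sensor_id: str, sample: Sample) -> None:
        self.received.append((sensor_id, sample))


# Usage with hypothetical sensor IDs
broker = SensorBroker()
my_obs = PrivateObservatory(broker, ["coastal-ctd-01", "seafloor-seismometer-03"])
broker.publish("coastal-ctd-01", {"temperature_c": 9.8})   # delivered to my_obs
broker.publish("some-other-sensor", {"value": 1.0})         # no subscribers: dropped
print(my_obs.received)  # [('coastal-ctd-01', {'temperature_c': 9.8})]
```

Decoupling publishers from subscribers this way means that adding a new sensor or a new user does not require changing anyone else’s code; a real system would add a network transport, persistence and access control on top.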

A. An oceanographer will often take an instrument to sea – I do it myself – put it down for 15 months, then come back and collect it. With a streaming data set from the OOI, you have to accept an infinitely long data stream in real time and do something with it. If you want to do some analysis, you have to build a computer program and the technology to compute on a continuous stream, rather than on files. So it represents new challenges in information technology, not just in how you operate a big global network of sensors. Seismologists do this kind of thing. The deputy director of our OOI project, Frank Vernon, runs a big network of NSF seismometers and another large program called EarthScope, with 500 stations in the U.S. that stream data in real time. So it’s not unheard of, but for this broad range of data, covering many heterogeneous disciplines, it is a new departure and a real challenge. Over the next 25 to 30 years, we have to keep the program scalable so that adding new sensors is not overwhelmingly expensive. We have to make it simple and straightforward to add new sensors, and that means an awful lot of thought and planning about how the interfaces work, how you test the sensors before you bring them to sea, and so on. The Integrated Ocean Network will bring lots of data to shore and send commands back out to sea to control the instruments, without anyone physically going there in a ship.
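
Computing on "a continuous stream, rather than on files" means each sample is processed as it arrives, with bounded memory. One widely used seismological example is the STA/LTA trigger, which compares a short-term average of signal energy with a long-term average and flags an event when the ratio jumps. The sketch below illustrates that general technique; it is not a description of what the OOI or Vernon’s networks actually run, and the function name, window lengths, threshold and the use of squared amplitude as "energy" are assumptions chosen for the example.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def sta_lta_triggers(samples: Iterable[float], sta_len: int = 5,
                     lta_len: int = 50, threshold: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Consume a (possibly unbounded) stream one sample at a time and yield
    (sample_index, ratio) each time the STA/LTA ratio rises above threshold.

    Only the last lta_len energies are kept, so memory use stays bounded.
    """
    sta_win: Deque[float] = deque(maxlen=sta_len)
    lta_win: Deque[float] = deque(maxlen=lta_len)
    sta_sum = lta_sum = 0.0
    triggered = False
    for i, x in enumerate(samples):
        energy = x * x                      # assumed energy measure: squared amplitude
        # Remove the value that the bounded deque is about to discard
        if len(sta_win) == sta_len:
            sta_sum -= sta_win[0]
        if len(lta_win) == lta_len:
            lta_sum -= lta_win[0]
        sta_win.append(energy)
        lta_win.append(energy)
        sta_sum += energy
        lta_sum += energy
        if len(lta_win) < lta_len:
            continue                        # wait until the long window is full
        lta = lta_sum / lta_len
        ratio = (sta_sum / sta_len) / lta if lta > 0 else 0.0
        if ratio > threshold and not triggered:
            triggered = True                # report only the rising edge
            yield i, ratio
        elif ratio <= threshold:
            triggered = False


# Usage: background noise followed by a short burst
stream = [0.1] * 100 + [1.0] * 10 + [0.1] * 100
for index, ratio in sta_lta_triggers(stream):
    print(f"event at sample {index}, STA/LTA = {ratio:.2f}")  # event at sample 100, STA/LTA = 6.98
```

Because the function is a generator that never looks ahead, it can run indefinitely on a live feed; the same pattern of a small rolling state updated per sample applies to other streaming statistics.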

Q. Won’t this require a change in mind-set on the part of oceanographers who will be primarily using the data, not collecting it?

A. It’s a very good thing because it expands your capability to make measurements, but most importantly it expands the scientific and educational audience that actually uses the data. Generally, an individual scientist goes to sea, collects the data, writes papers on it and then, all too often, the data basically disappear. Maybe they’re on a disk drive somewhere, but that doesn’t help much. So the country’s investment in individual experiments does get used, but it’s sometimes a lot less expensive to go out and recollect the data than to find the old data and reuse them. So it changes the way we think about how we can do science. But there is no tradition of doing oceanography in this fashion, so there is naturally a cultural resistance to this kind of work. It’s a paradigm change in the way we think about doing oceanography, and it provides an entirely new tool that was previously unavailable. We and the NSF are taking a gamble that this approach to doing oceanography will work.

Q. What is the current timetable for bringing the OOI system on line?

A. We are bound to have the whole program running in 3 ½ to 4 years. Then the construction funding is over, and we have to pick up operations for subsequent years through a separate budget for operations and maintenance. So we have maybe another four years at most to finish construction. My life will become even more intense, and for all the people who work with us here, the time will get more compressed and busier and busier. We’re using all the standard systems engineering approaches, reporting and documentation, and infinite numbers of teleconferences to build this system. So lots and lots of the time and money are going into planning, as much as into building the instruments themselves. Once that’s done, we will have a tool set available in the Integrated Ocean Network from which we’ll be able to substantially expand the scope of what we’re measuring on Earth.

Media Contacts

Doug Ramsey, 858-822-5825, dramsey@ucsd.edu