UC San Diego's 'Virtualize Me' Creates Look-alike Avatars in Real-time

October 11, 2011 / By Tiffany Fox, (858) 246-0353, tfox@ucsd.edu

San Diego, Calif., Oct. 11, 2011 — If a tree falls in the virtual world and there’s no one there to write the code, does it make a sound?


The answer, of course, is no, and that can pose a frustrating disconnect for users of virtual reality platforms like Second Life, where such time-space hiccups also extend into the visual realm. Virtual reality aficionados want their avatars to mimic their own actions seamlessly and in real time, without having to write code on the fly or wear cumbersome motion-capture sensors.

By exploiting the capabilities of modern graphics cards, University of California, San Diego computer scientist Daniel Knoblauch has developed a fully immersive virtual reality experience with a resulting avatar that looks like the user and does what the user does, precisely when the user does it.

“If you smile in Second Life, no one can see you smiling unless you input that as code,” says Knoblauch, a recent Ph.D. in UCSD’s Department of Computer Science and Engineering and a researcher with the UCSD division of the California Institute for Telecommunications and Information Technology (Calit2). “In our system, the way you look and everything you do is captured in real time.”

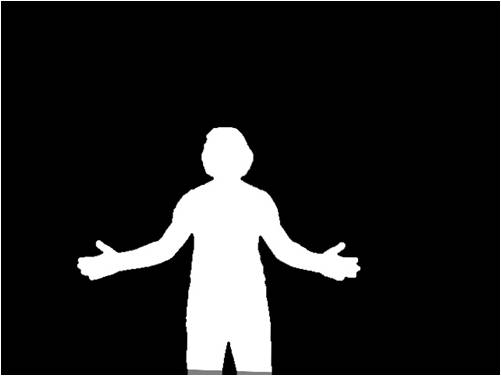

To create the system, dubbed “Virtualize Me,” Knoblauch modified an existing algorithm for reconstructing what’s known as a volumetric visual hull: an approximation of an object’s 3D shape carved out from its silhouettes in several camera views. His technique uses a flexible, focused grid of ‘voxels,’ or 3D pixels, to reconstruct the target object at higher spatial resolution while also extracting information from the foreground and background of the input images.


In other words, “Virtualize Me” re-creates high-resolution, realistic 3D imagery, right down to the color and texture of one’s clothing, and renders it fast enough to follow the user’s movements almost instantly.
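The visual hull idea described above can be illustrated with textbook silhouette-based voxel carving: a voxel survives only if its projection lands on the subject’s silhouette in every camera view. The Python sketch below is a minimal, CPU-only version under stated assumptions: grayscale images stored as nested lists, cameras described by 3x4 projection matrices, and silhouettes produced by simple background subtraction with an arbitrary threshold. The function names (`silhouette`, `project`, `in_mask`, `visual_hull`) and the threshold value are hypothetical. The sketch does not reproduce Knoblauch’s modified GPU algorithm or his flexible, focused voxel grid; the fixed `bounds` box only hints at the idea of concentrating voxels around the subject.

```python
import math
from itertools import product


def silhouette(frame, background, threshold=30):
    """Foreground mask via simple background subtraction (an assumption).

    frame and background are grayscale images given as lists of rows;
    a pixel counts as foreground if it differs from the background by
    more than `threshold` (an illustrative value, not from the article).
    """
    return [
        [abs(f - b) > threshold for f, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


def project(P, point):
    """Project a 3D point with a 3x4 camera matrix P (a list of 3 rows).

    Returns pixel coordinates (u, v), or None if the point is behind the camera.
    """
    x, y, z = point
    h = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in P]
    if h[2] <= 0:
        return None
    return h[0] / h[2], h[1] / h[2]


def in_mask(mask, uv):
    """True if pixel coordinates uv land on a foreground pixel of mask."""
    if uv is None:
        return False
    col, row = math.floor(uv[0]), math.floor(uv[1])
    return 0 <= row < len(mask) and 0 <= col < len(mask[0]) and bool(mask[row][col])


def visual_hull(cameras, masks, bounds, resolution):
    """Keep the voxel centers whose projections fall inside every silhouette.

    bounds: ((xmin, xmax), (ymin, ymax), (zmin, zmax)), the region around the subject.
    resolution: number of voxels along each axis.
    Each voxel is tested independently of all the others.
    """
    steps = [(hi - lo) / resolution for lo, hi in bounds]
    hull = []
    for idx in product(range(resolution), repeat=3):
        # Center of voxel idx inside the bounding box.
        center = tuple(lo + (i + 0.5) * s for (lo, _), i, s in zip(bounds, idx, steps))
        # Carve away the voxel unless every camera sees it on the silhouette.
        if all(in_mask(m, project(P, center)) for P, m in zip(cameras, masks)):
            hull.append(center)
    return hull
```

Because every voxel test is independent, the loop maps naturally onto thousands of parallel threads on a graphics card, the kind of hardware the article credits for the system’s speed. A real system would also need calibrated cameras and, to recover clothing color, a separate step that samples pixel colors for each surviving voxel.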

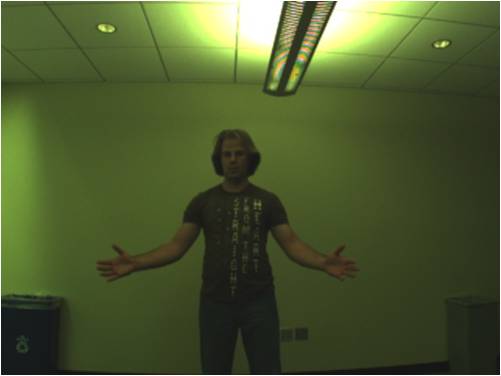

To try the ‘virtualization’ myself, I stepped inside a nondescript room at Calit2’s headquarters in Atkinson Hall, where eight Point Grey cameras, each streaming data at 30 frames per second, were mounted on the four surrounding walls. After Knoblauch typed a few commands on his keyboard, it took only seconds for the cameras to capture my image and for my avatar to appear on the computer monitor, complete with the ponytail, red dress and high heels I was wearing.

My avatar was standing in a virtual room with gray walls and checkerboard floors, and as I moved, she moved. Suddenly, a shower of particles appeared in front of my avatar, and as I waved my arms to interact with it, my virtual persona followed suit with less than a second of delay. I was also able to virtually ‘spin’ and interact with a cube covered with images of foreign destinations, a touchscreen-like tool Knoblauch says could eventually be used for virtual tourism.


“The particles you just touched are interacting with virtual gravity, in other words.”

Although I was still well aware that I was standing in a room watching my avatar on a screen, Knoblauch says that a completely immersive experience — where I would feel as though I actually exist in the virtual world — could be achieved via a head-mounted display. The data could even be streamed to Calit2’s fully immersive StarCAVE, where my avatar could interact with the virtual world in 3D, and Knoblauch says he’s also considering adding audio to enhance the experience.

Knoblauch still has a few kinks to work out. “There is some visual ‘noise’ from the color extraction, because the voxel grid moves slightly even when the target object is not moving and that can cause aliasing effects,” he says. Even so, the system has already caught the attention of long-time Calit2 industry partner Qualcomm, Inc., which hired Knoblauch as a senior engineer in Corporate Research for the Augmented Reality Software Group earlier this month.

Knoblauch says he expects to work on similar projects while at Qualcomm, but even if he doesn’t, he could easily set up Virtualize Me in his own living room. To run the entire system, he needs only three servers (one to reconstruct the data in 3D and two to capture input from the video cameras), network cables, a switch and the cameras themselves, which cost around $300 to $400 each.

Knoblauch’s faculty advisor, Calit2 Professor of Visualization and Virtual Reality Falko Kuester, says Virtualize Me could enhance or even revolutionize the gaming, social networking and telepresence industries.

“If you think about collaborative workspaces for telepresence or beyond, one of the desires is to have face-to-face, lifelike interaction,” he says. “Right now, videoconferencing is just a set of pretty faces looking at each other, but some of the key aspects, like body language, are lost, which makes it extremely difficult to keep people’s attention and keep them from drifting off.

“The focus for Daniel’s research has truly been how to generate the digital object quickly, regardless of what it is. To achieve this, he did everything from designing and developing the imaging system to extracting the avatar itself, texture mapping and coloring the avatar and delivering the avatar to where it should be in space.”

Kuester says one of the system’s most promising features is that it’s ‘agnostic’ in terms of the video cameras used to capture the visual data, meaning it can work with any camera, even one built into a phone.

“Modern phone cameras are extremely high-quality,” he adds, “so there are truly exciting opportunities ahead of us in terms of virtualizing space.”
